To be human is to be biased.

From the route we take home to where we choose to go for lunch, we all have inherent preferences that predispose us to particular decisions.

And while we assume that our choices are rational, we know that isn’t always the case. Sometimes we can’t explain why we prefer one option over another.

That might be acceptable when choosing between a Coke and a Pepsi, but it is a very different matter when we ask an artificial intelligence (AI) system to make judgements and predictions with significant human consequences.

Bias persists in every corner of our society, so it should be no surprise that we see it in AI.

More than 50 years ago, computer scientist Melvin Conway observed that the way an organization is structured strongly shapes the systems it creates. This observation has become known as Conway’s Law, and it holds true for AI. The values of the people developing AI systems are not only strongly entrenched but also concentrated in a small number of places.

In Canada, AI talent and expertise are concentrated in three cities: Edmonton, Toronto, and Montreal. Each city has a leading AI pioneer around whom people and funding have coalesced.

Economically, this concentration has led to greater competitiveness and served as a magnet to attract and retain the best AI talent.

And it’s working. Of the 46 AI research chairs at the Canadian Institute for Advanced Research (CIFAR), over half were recruited to Canada. But this concentration also brings challenges that Canada and other global AI hubs are struggling with.

These AI tribes are overwhelmingly homogeneous, futurist and author Amy Webb has found. Their members tend to attend the same universities, are affluent and highly educated, and are mostly male.

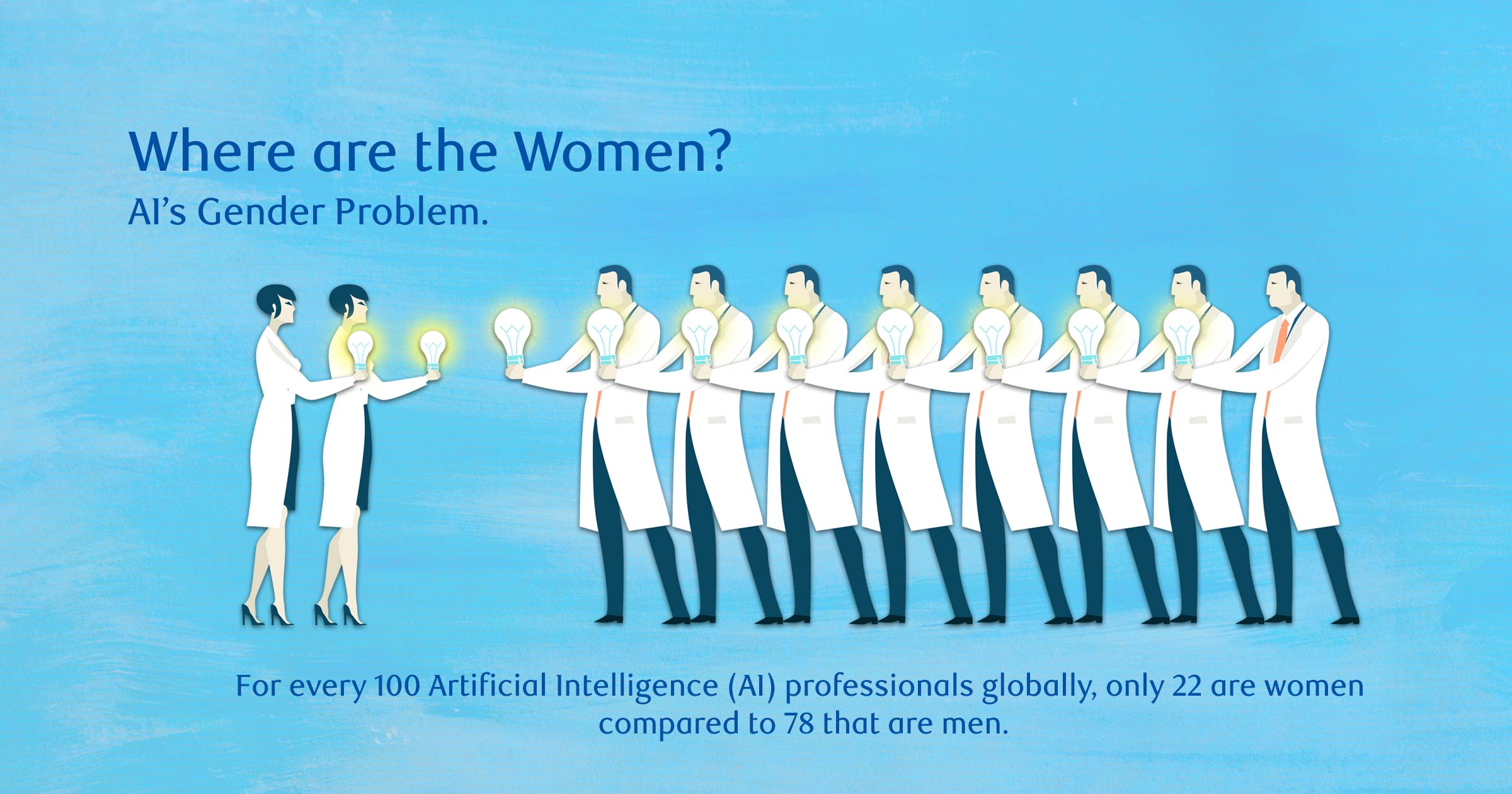

This finding is reinforced by the World Economic Forum’s most recent Global Gender Gap Report, which found that only 22% of AI professionals globally are female. Canada fares marginally better, at 24%.

The gender imbalance is even worse in AI research: an Element AI study found that, on average, there were nine men for every woman researching AI.

This matters because AI learns from whatever the data shows it, and in the process it can inherit its creators’ unconscious biases.

We see AI’s gender imbalance reflected in the systems being developed. In one study, a visual recognition system was trained on a gender-biased data set in which the activity of cooking was associated with women 33% more often than with men; the trained model amplified this disparity to 68%.

These tribes are also overwhelmingly white.

In a report released last month, the AI Now Institute dubbed the situation a “Diversity Disaster”: only 2.5% of Google’s workforce is black, Facebook and Microsoft are each at 4%, and data on LGBTQ participation in AI is non-existent.

In a multicultural society like ours, the lack of diversity in AI should trouble all Canadians.

As AI is increasingly integrated into society, from setting insurance rates to policing, health care, student admissions, and more, this issue becomes more urgent every day.

In the next few years, Gartner predicts that 85% of AI projects will deliver erroneous outcomes due to bias in data, algorithms, or the teams responsible for managing them.

We cannot afford to tiptoe around uncomfortable conversations about sexism, racism, and unconscious bias, and how they are making their way into AI.

Suppose a medical company wants to develop an AI system to find the best treatment plan for patients. To do so, it must first define its objective. Is it to minimize the cost of treatment? Or is it to maximize patient outcomes?

If the goal is to minimize costs, the algorithm could conclude that an effective way to achieve its objective is to recommend less effective treatments, based on a cost-benefit analysis that weighs each treatment’s probability of success against its cost.
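To make the objective problem concrete, here is a minimal Python sketch showing how the same data can produce different recommendations depending on the objective chosen. The treatment names, success probabilities, costs, and the `recommend` function are all hypothetical, invented for illustration; a real clinical system would be far more complex.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Treatment:
    name: str
    p_success: float  # estimated probability the treatment works (0..1)
    cost: float       # cost per patient, in dollars


# Hypothetical options: the names and numbers are invented for illustration.
TREATMENTS = [
    Treatment("standard", p_success=0.60, cost=2_000),
    Treatment("advanced", p_success=0.85, cost=15_000),
]


def recommend(treatments, objective):
    """Pick a treatment under a given objective (hypothetical helper)."""
    if objective == "minimize_cost":
        # Cost-benefit ratio: probability of success per dollar spent.
        return max(treatments, key=lambda t: t.p_success / t.cost)
    if objective == "maximize_outcome":
        # Ignore cost and pick the treatment most likely to succeed.
        return max(treatments, key=lambda t: t.p_success)
    raise ValueError(f"unknown objective: {objective!r}")
```

With these invented numbers, the cost-driven objective picks the cheaper, less effective "standard" option, while the outcome-driven objective picks "advanced". Nothing in the data changed; only the objective did.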

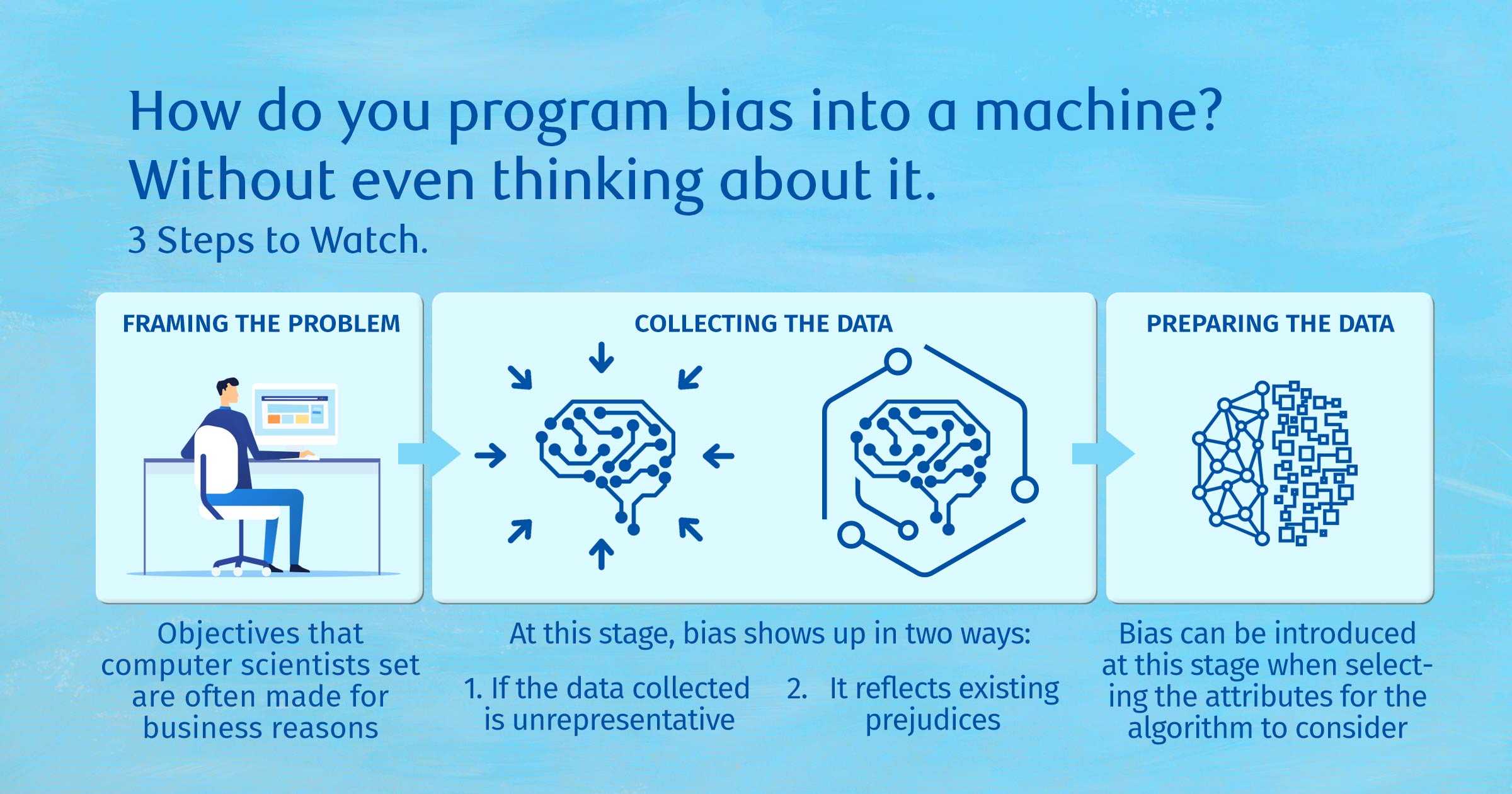

When organizations set objectives, whether for personalized medicine or another application, these decisions are often made for business reasons rather than with fairness or non-discrimination in mind.

Bias can also be introduced through the way data is collected. If the data is unrepresentative or reflects existing prejudices, an organization could deliberately exclude race as an explicit input and still introduce racial bias.

For example, research from United Way Greater Toronto found that inequality and race in the Toronto region are increasingly divided by postal code. An organization could intentionally exclude race and still produce a fundamentally flawed output if it used postal code as an input, because postal code can act as a stand-in for race.
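A toy Python sketch shows how a proxy can carry bias even when a protected attribute is never used. The records, area labels, group labels, and approval rule below are invented for illustration and do not reflect the United Way data. The rule reads only the postal area, yet approval rates still differ by group because, in this toy data, area and group are correlated.

```python
from collections import defaultdict

# Toy records, invented for illustration: (postal_area, group).
# "group" stands in for a protected attribute such as race;
# the approval rule below never reads it.
APPLICANTS = [
    ("area_1", "group_x"), ("area_1", "group_x"), ("area_1", "group_y"),
    ("area_2", "group_y"), ("area_2", "group_y"), ("area_2", "group_x"),
]

# A hypothetical rule, e.g. learned from historical data, that favours one area.
APPROVED_AREAS = {"area_1"}


def approve(postal_area):
    """Decide using postal area only; the protected attribute is excluded."""
    return postal_area in APPROVED_AREAS


def approval_rate_by_group(applicants):
    """Audit the outcome: share of each group that the rule approves."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for area, group in applicants:
        total[group] += 1
        approved[group] += int(approve(area))
    return {group: approved[group] / total[group] for group in total}
```

Here `group_x` is approved two times out of three and `group_y` only once out of three, even though the rule never sees the group. Checking outcomes by group, rather than only checking which inputs are used, is what surfaces the disparity.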

Not everything an AI system outputs is easy to explain, either. Businesses want the systems with the most accurate predictions, but those models are often also the most complex and least understood.

Whether it is the songs suggested to us or the ads we see, even the engineers who built these systems cannot fully explain how they arrive at their recommendations. That may be fine for your Spotify playlist, but how comfortable would you be taking medical advice from an AI?

This is the challenge that the researchers behind Deep Patient faced. Trained on data from 700,000 patients, the AI system made what turned out to be very good predictions of disease, including early-stage liver cancer, but the researchers could not explain how it arrived at these conclusions.

Without explainability, how comfortable would a medical team be in acting on these predictions? Would they be willing to change medication, administer chemotherapy, or go in for surgery?

The issue is not AI, but how we build it.

We must recognize the deep social issues captured in human data and root them out before they become encoded. Currently, bias is often an afterthought: a problem to be solved at the end of the development cycle.

Instead, non-technical experts, such as sociologists, need to be involved throughout the development cycle, working side-by-side with those writing the code.

We must treat AI systems as we would a new pharmaceutical drug, ensuring they undergo rigorous testing before release to understand potential side effects and identify any biases that could affect their output.

Nor should evaluation stop after release. AI systems should be continuously monitored and regularly re-evaluated throughout their lifecycle. A health study on the half-life of data suggests that clinical data remains useful for only a short window before its value declines sharply. After only four months, an AI system could be making decisions with serious human consequences based on outdated data.
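One way to reason about data half-life is to assume that a record’s usefulness decays exponentially over time. The Python sketch below assumes that decay model and the four-month half-life described above; the `data_weight` and `needs_reevaluation` functions and the 0.5 review threshold are hypothetical choices for illustration, not taken from the study.

```python
def data_weight(age_months: float, half_life_months: float = 4.0) -> float:
    """Relative usefulness of data of a given age, ASSUMING exponential decay.

    After one half-life the weight is 0.5, after two it is 0.25, and so on.
    """
    if age_months < 0 or half_life_months <= 0:
        raise ValueError("age must be non-negative and half-life positive")
    return 0.5 ** (age_months / half_life_months)


def needs_reevaluation(training_data_age_months: float,
                       min_weight: float = 0.5) -> bool:
    """Flag a model whose training data has decayed below min_weight.

    min_weight is a hypothetical policy threshold, not a clinical standard.
    """
    return data_weight(training_data_age_months) < min_weight
```

Under these assumptions, four-month-old data carries half its original weight and eight-month-old data a quarter, so a monitoring job built this way would flag a model for review shortly after its training data passes the four-month mark.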

Yet despite fears about AI and its impact on humanity’s future, every possible solution for mitigating bias and improving AI requires human intervention.

A symbiotic human-AI relationship is the concept behind human-in-the-loop (HITL) systems. HITL is a technical term for a class of systems that are not fully autonomous; it is used where confidence is low and the cost of errors is high, such as in health care.

Whenever certainty is low, an HITL system automatically loops in a human. This is critical in health care, where practitioners interact with the output: engaging them in the process enhances explainability and gives them the confidence to act on the result.
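The core routing idea behind HITL can be sketched in a few lines of Python, assuming the model reports a confidence score between 0 and 1. The `Prediction` type, the `route` function, and the 0.9 threshold are hypothetical choices for illustration; a real deployment would set the threshold based on the cost of errors in its domain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    label: str
    confidence: float  # model's estimated probability for its label, 0..1


def route(prediction: Prediction, threshold: float = 0.9) -> str:
    """Decide whether a prediction is handled automatically or by a human.

    The 0.9 default is a hypothetical threshold for illustration only.
    """
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if prediction.confidence >= threshold:
        return "automated"
    # Low certainty: loop in a human reviewer instead of acting alone.
    return "human_review"
```

For example, a prediction with confidence 0.97 would be handled automatically, while one at 0.62 would be sent to a clinician for review. Raising the threshold sends more cases to humans, trading throughput for safety.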

What’s clear is that humanity must be at the core of AI development.

Launched in December 2018, the Montreal Declaration for Responsible Development of Artificial Intelligence sets out an ethical framework calling for guidelines to ensure that AI “adheres to our human values and brings true social progress.” It has been signed by 45 organizations and 1,394 citizens.

Earlier this year, the Council of Europe adopted its own declaration on artificial intelligence, which calls on states to safeguard the “equality and dignity of all humans as independent moral agents” in an AI-driven world.

In the United States, lawmakers have introduced the Algorithmic Accountability Act, which would require organizations to audit their systems for bias if they have revenue of over $50 million per year, hold information on at least one million people or devices, or primarily act as data brokers that buy and sell consumer data.

But who will keep the signatories accountable? If it is a role that will be undertaken by government, who provides oversight over how governments use AI? What happens when governments disagree on how AI can or should be deployed?

There are two prevalent world views on AI use. One, driven predominantly by Silicon Valley, focuses on consumerism and value creation. The other comes from the other side of the Pacific, where the Chinese government views AI as an instrument of social governance.

These questions and challenges present an opportunity for Canada to become a global leader in advancing AI for good. This was one of our 10 recommendations to make Canada AI-ready.

Though Canada was the first country with a national AI strategy, we have fallen behind in capitalizing on AI through its application and commercialization. An ‘AI for Good’ strategy alongside Canada’s AI supercluster and a highly anticipated national data strategy could enhance Canada’s competitiveness as a global AI hub and help us reclaim our position as a leader in artificial intelligence.

The question is whether we are willing to ask the hard questions.

AI is holding up a mirror to society and forcing us to confront the polite fictions that we tell ourselves. We can no longer afford to look away.

Listen to our conversation on the RBC Disruptors podcast about the potential of artificial intelligence.


As Senior Vice-President, Office of the CEO, John advises the executive leadership on emerging trends in Canada’s economy, providing insights grounded in his travels across the country and around the world. His work focuses on technological change and innovation, examining how to successfully navigate the new economy so more people can thrive in the age of disruption. Prior to joining RBC, John spent nearly 25 years at the Globe and Mail, where he served as editor-in-chief, editor of Report on Business, and a foreign correspondent in New Delhi, India. Having interviewed a range of prominent world leaders and figures, including Vladimir Putin, Kofi Annan, and Benazir Bhutto, he possesses a deep understanding of national and international affairs. In the community, John serves as a Senior Fellow at the Munk School of Global Affairs and the C.D. Howe Institute, and is a member of the advisory councils of both the Wilson Center’s Canada Institute and the Canadian International Council. John is the author of four books: Out of Poverty; Timbit Nation; Mass Disruption: Thirty Years on the Front Lines of a Media Revolution; and Planet Canada: How Our Expats Are Shaping the Future.

This article is intended as general information only and is not to be relied upon as constituting legal, financial or other professional advice. A professional advisor should be consulted regarding your specific situation. Information presented is believed to be factual and up-to-date but we do not guarantee its accuracy and it should not be regarded as a complete analysis of the subjects discussed. All expressions of opinion reflect the judgment of the authors as of the date of publication and are subject to change. No endorsement of any third parties or their advice, opinions, information, products or services is expressly given or implied by Royal Bank of Canada or any of its affiliates.
